CI/CD using GitLab, AWS, EKS, Kubernetes, and ECR

Introduction: We recently deployed an EKS cluster for our streaming app. We have been using GitLab for quite some time, with Ansible roles deploying our applications to the staging and production environments, but we wanted to try something native to GitLab CI/CD.

Assumptions:

1. You have a basic understanding of all the technologies named in the title of this article.

2. You have already deployed your applications in your Kubernetes cluster as Deployments, and they are working fine.

3. CI/CD will be triggered only if your commit is tagged with a version number.

GitLab Kubernetes Integration

Let's start by configuring the GitLab integration with the Kubernetes cluster. I simply followed this documentation: https://docs.gitlab.com/ee/user/project/clusters/add_remove_clusters.html#existing-eks-cluster

Install Helm Tiller and GitLab Runner from the same screen.

Note that we added this cluster at the group level. You can choose to add it at the project level instead.

IAM User

Create an IAM user in AWS for accessing ECR, named, say, gitlab. This user will have programmatic access only. While creating the user, we will also create a group, which will have the following permissions:

- AmazonEKSWorkerNodePolicy
- AmazonEC2ContainerRegistryFullAccess
- AmazonEC2ContainerRegistryReadOnly
- AmazonEC2ContainerServiceFullAccess
- AmazonEKS_CNI_Policy

At the end of this process, AWS provides a credentials CSV file, which we will use later to create the group-level variables for AWS authentication. These credentials authenticate against both ECR and the EKS cluster.
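For readers who prefer the command line to the console, the same group and user could be created with the AWS CLI. The following is a minimal sketch, not the procedure used in this article. The group name `gitlab-ci` and the helper `iam_commands` are hypothetical, and the policy ARNs assume the managed policies sit under the default `arn:aws:iam::aws:policy/` path and are still offered by AWS. The script only prints the commands, so they can be reviewed before being run.

```shell
#!/bin/sh
# Sketch: print the AWS CLI commands that create the IAM group and user.
# GROUP is a hypothetical name; USER_NAME matches the "gitlab" user above.
GROUP="gitlab-ci"
USER_NAME="gitlab"

# AWS managed policies listed in this article (assumed to use the default path).
POLICIES="AmazonEKSWorkerNodePolicy
AmazonEC2ContainerRegistryFullAccess
AmazonEC2ContainerRegistryReadOnly
AmazonEC2ContainerServiceFullAccess
AmazonEKS_CNI_Policy"

# iam_commands is a hypothetical helper: it prints commands, it does not run them.
iam_commands() {
  echo "aws iam create-group --group-name $GROUP"
  for policy in $POLICIES; do
    echo "aws iam attach-group-policy --group-name $GROUP --policy-arn arn:aws:iam::aws:policy/$policy"
  done
  echo "aws iam create-user --user-name $USER_NAME"
  echo "aws iam add-user-to-group --group-name $GROUP --user-name $USER_NAME"
  # create-access-key returns the access key ID and the secret access key.
  echo "aws iam create-access-key --user-name $USER_NAME"
}

iam_commands
```

Once the printed commands look right, piping the output to `sh` would execute them with whatever AWS credentials are active in the current shell.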

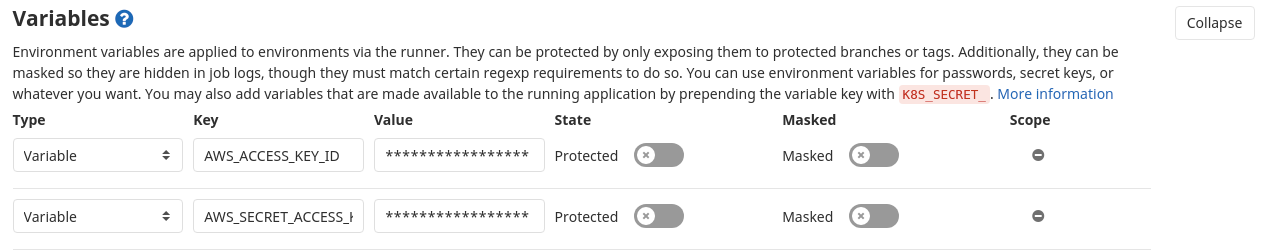

Variables

Once we are done with this, we can proceed to add variables at the group level.

The following two variables need to be added for AWS authentication:

- AWS_ACCESS_KEY_ID
- AWS_SECRET_ACCESS_KEY

K8S Access

Configure K8S to allow deployments from the gitlab user we created above. To do so, we need to modify the aws-auth ConfigMap defined in the kube-system namespace and add a mapUsers: section to it.

    kubectl -n kube-system edit cm aws-auth

Add the following section:

    mapUsers: |
      - userarn: arn:aws:iam::your-aws-account-id:user/gitlab
        username: gitlab
        groups:
          - system:masters

The sample aws-auth file is here.
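Because the indentation of the `mapUsers` block is significant, it can help to generate the entry rather than type it by hand. The sketch below is one way to do that. `render_map_users` is a hypothetical helper (not part of kubectl or the AWS CLI), and the account ID in the example call is a placeholder.

```shell
#!/bin/sh
# Sketch: print a mapUsers entry for the aws-auth ConfigMap.
# render_map_users is a hypothetical helper written for this example.
render_map_users() {
  account_id="$1"   # 12-digit AWS account ID (placeholder in the call below)
  iam_user="$2"     # IAM user name, e.g. gitlab
  cat <<EOF
mapUsers: |
  - userarn: arn:aws:iam::${account_id}:user/${iam_user}
    username: ${iam_user}
    groups:
      - system:masters
EOF
}

render_map_users 123456789012 gitlab
```

The printed block belongs under the `data:` key of the ConfigMap, at the same level as the existing `mapRoles` entry. After saving, running `kubectl auth can-i patch deployments -n <your-desired-k8s-namespace>` with the gitlab user's credentials is a quick way to check that the mapping took effect.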

CI/CD

Now we move on to a project where we want to configure CI/CD.

Create a .gitlab-ci.yml file in the project. You may follow this template.

    stages:
      - build
      - staging
      - production

    variables:
      DEPLOYMENT_NAME: <your-project-name>
      DOCKER_REGISTRY: <your-ecr-registry>/$DEPLOYMENT_NAME

    # Build the image and push it to ECR, tagged with the Git tag.
    build:
      stage: build
      image: docker:19.03.1
      variables:
        DOCKER_DRIVER: overlay
        DOCKER_HOST: tcp://localhost:2375
        DOCKER_TLS_CERTDIR: ""
      services:
        - docker:19.03.1-dind
      before_script:
        - docker info
        - apk add --no-cache curl jq python py-pip
        - pip install awscli
      script:
        - $(aws ecr get-login --no-include-email --region <your-aws-region>)
        - docker build -t $DOCKER_REGISTRY:$CI_COMMIT_TAG .
        - docker push $DOCKER_REGISTRY:$CI_COMMIT_TAG
      only:
        - tags

    # Shared step: point kubectl at the EKS cluster.
    .kubectl_config: &kubectl_config
      - aws eks --region <your-aws-region> update-kubeconfig --name <your-aws-cluster-name>

    # Update the image of the existing Deployment in the staging namespace.
    staging:
      image: docker.io/sulemanhasib43/eks:latest
      stage: staging
      variables:
        K8S_NAMESPACE: <your-desired-k8s-namespace>
      before_script: *kubectl_config
      script:
        - kubectl version
        - kubectl -n $K8S_NAMESPACE patch deployment $DEPLOYMENT_NAME -p '{"spec":{"template":{"spec":{"containers":[{"name":"'"$DEPLOYMENT_NAME"'","image":"'"$DOCKER_REGISTRY:$CI_COMMIT_TAG"'"}]}}}}'
      only:
        - tags
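Two shell details in the template are easy to get wrong: the registry host needed for the ECR login, and the quoting in the `kubectl patch` call. The sketch below reproduces both outside the pipeline so they can be inspected locally. The three values at the top are example placeholders. In the pipeline they come from the `variables` block and from GitLab's predefined `CI_COMMIT_TAG`.

```shell
#!/bin/sh
# Sketch: reproduce the patch payload and the registry host locally.
# These values are example placeholders, not real resources.
DEPLOYMENT_NAME="my-app"
DOCKER_REGISTRY="123456789012.dkr.ecr.us-east-1.amazonaws.com/my-app"
CI_COMMIT_TAG="v1.0.0"

# The registry host is the part of DOCKER_REGISTRY before the first slash.
ECR_HOST="${DOCKER_REGISTRY%%/*}"

# The template installs AWS CLI v1 via pip, which still has `aws ecr get-login`.
# AWS CLI v2 removed that command; there the login would look like:
#   aws ecr get-login-password --region <your-aws-region> \
#     | docker login --username AWS --password-stdin "$ECR_HOST"

# Single quotes keep the JSON literal; each '"$VAR"' closes the single-quoted
# string, expands the variable inside double quotes, then reopens the string.
PATCH='{"spec":{"template":{"spec":{"containers":[{"name":"'"$DEPLOYMENT_NAME"'","image":"'"$DOCKER_REGISTRY:$CI_COMMIT_TAG"'"}]}}}}'

echo "$ECR_HOST"
echo "$PATCH"
```

Note that the patch only works if the container inside the Deployment has the same name as the Deployment itself, as the template assumes. If the JSON quoting feels fragile, `kubectl -n $K8S_NAMESPACE set image deployment/$DEPLOYMENT_NAME $DEPLOYMENT_NAME=$DOCKER_REGISTRY:$CI_COMMIT_TAG` should achieve the same update, and `kubectl -n $K8S_NAMESPACE rollout status deployment/$DEPLOYMENT_NAME` can be added afterwards so the job waits for the rollout to finish.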

Finally, once the .gitlab-ci.yml file is in our Git repo, pushing a version tag triggers the build and the subsequent deployment to staging. You can configure the production deployment with the same configuration; just change the environment variables.

Hope this helps. Happy DevOps. :)