Understanding the Model Context Protocol (MCP): The USB-C for AI Applications

Introduction to MCP

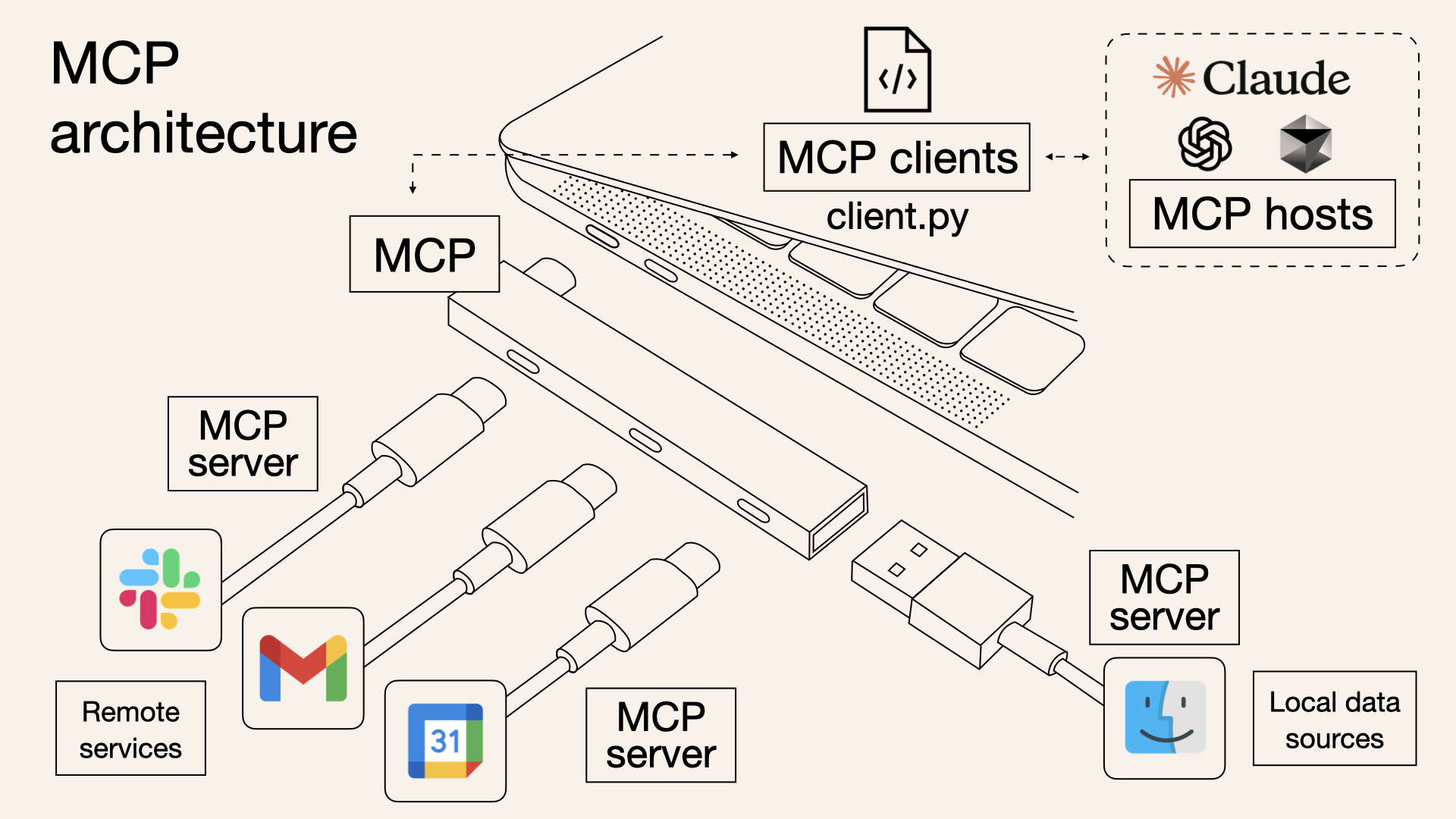

In the rapidly evolving landscape of artificial intelligence (AI), efficiency and interoperability are paramount. Enter the Model Context Protocol (MCP) — a standardized open protocol designed to streamline how applications interact with Large Language Models (LLMs).

MCP functions like a USB-C port for AI applications. Just as USB-C simplifies connections between devices and peripherals, MCP standardizes the connection between AI models and data sources, ensuring smoother and more reliable interactions. Learn more about MCP.

How MCP Works

MCP establishes a framework for applications to seamlessly provide context to LLMs. Context, in this case, refers to the data and instructions that enable LLMs to generate relevant and accurate responses. By adhering to this standard, developers can avoid bespoke integrations and instead leverage a universal approach to integrate models with their applications.

Integrating MCP with Open WebUI

One of MCP’s key features is its compatibility with platforms like Open WebUI. Through the MCP-to-OpenAPI proxy server (mcpo) provided by Open WebUI, developers can harness the power of MCP for OpenAPI-compliant applications. However, it’s important to note that this integration has limitations: most local models are not compatible with MCP. Learn more about Open WebUI and MCP.

Challenges of Running Local Models with MCP

While local models have their benefits, they come with significant challenges, especially when attempting to integrate MCP. Here’s what you might encounter when running models locally:

Performance Impact

- Responses are 5-10 times slower compared to cloud-based services.

- High utilization of system resources, including CPU, GPU, and RAM, can make your computer less responsive.

Tool Reliability Issues

- Code analysis, file operations, and browser automation may be unreliable.

- Terminal commands fail more frequently, and complex tasks often encounter breakdowns.

Hardware Requirements

To even consider running local models, you’ll need:

- A modern GPU with at least 8GB VRAM (e.g., RTX 3070 or better).

- 32GB or more of system RAM.

- Fast SSD storage and robust cooling solutions.

For many users, these limitations make cloud-based solutions a more practical choice. Read more about local model performance.

MCP and Claude Desktop

MCP shines when paired with Claude Desktop, a platform developed by Anthropic, the same company behind MCP. Claude AI, powered by Anthropic’s advanced Sonnet LLM, integrates seamlessly with MCP to deliver top-tier performance. This synergy ensures reliable, high-quality outputs, making Claude Desktop a preferred choice for MCP-powered workflows.

Best Development Experience: Cline and VSCode

For developers seeking robust AI integration in their workflows, Cline offers an unparalleled experience in Visual Studio Code (VSCode). Cline’s extension for VSCode allows developers to utilize both cloud-based and local LLMs effortlessly. With Cline, you can:

- Access powerful AI tools directly within VSCode.

- Seamlessly integrate AWS Bedrock using AWS Profiles. Learn how to install Cline and set up AWS Bedrock.

MCP Servers

The Model Context Protocol (MCP) offers a diverse array of servers designed to extend the capabilities of Large Language Models (LLMs) by enabling secure and controlled access to various tools and data sources. The official MCP repository on GitHub provides a comprehensive collection of these servers, categorized into reference implementations, official integrations, and community-contributed servers.

Reference implementations serve as foundational examples demonstrating core MCP features and SDK usage. These include servers for interacting with file systems, databases like PostgreSQL and SQLite, cloud storage services such as Google Drive, and development tools including Git, GitHub, and GitLab. Additionally, there are servers for web and browser automation (e.g., Puppeteer), productivity and communication platforms (e.g., Slack, Google Maps), and AI-specific tools like EverArt for image generation and Sequential Thinking for dynamic problem-solving.

Official integrations are maintained by companies to provide production-ready MCP servers tailored to their platforms. Notable examples include integrations with Axiom for log analysis, Browserbase for cloud-based browser automation, Cloudflare for resource management, and Stripe for payment processing. These integrations enable seamless interaction between LLMs and enterprise-grade services, enhancing the practical applications of MCP in various industries.([GitHub][2], [modelcontextprotocol.info][3])

Community-contributed servers further expand MCP’s versatility, covering a wide range of applications. These include servers for managing Docker containers and Kubernetes clusters, interacting with project management tools like Linear and Todoist, accessing music services such as Spotify, and connecting to various databases including MySQL, MSSQL, and Snowflake. While these community servers showcase the adaptability of MCP, it’s important to note that they are untested and not officially endorsed, and thus should be used with caution.

For a detailed overview and access to the full list of available MCP servers, you can visit the official repository here: https://github.com/modelcontextprotocol/servers?tab=readme-ov-file.

Conclusion

The Model Context Protocol is revolutionizing how applications connect with AI models. By providing a standardized approach, MCP simplifies development, enhances compatibility, and unlocks new possibilities for integrating AI into diverse workflows. Whether you’re leveraging the cutting-edge features of Claude Desktop, navigating the challenges of local models, or integrating AI into VSCode with Cline, MCP ensures a smoother and more efficient process for everyone.